Standardization and normalization are both feature scaling techniques used a lot in machine learning. These two concepts are often used interchangeably in the machine learning world. However, there are significant differences between them. How do they differ from each other? First, let’s look at what feature scaling means.

Feature Scaling

It is a technique used to adjust the range of the features of data to a specific scale. The goal is to ensure that each feature contributes equally to the training by bringing them onto a common scale without distorting differences in the ranges of values. Two of the most common methods of feature scaling are normalization and standardization.

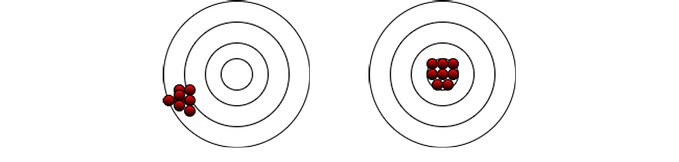

From the graphs, it can be deduced that the range of values of the data changes scaling and the distance between respective data points also changes. Algorithms that rely on distance calculations, like k-nearest neighbors (KNN) and k-means clustering, can measure similarities more fairly because all features contribute equally to the distance computations. Looking at the unscaled data, the horizontal distance is far longer than the vertical making it more dominant, making it seem more relevant than the vertical data point.

Normalization

Normalization is simply transforming a numerical data or feature to reside within a specific range, typically 0 and 1. You perform this transformation when you want your values to be on the same scale. For example, if we have house prices ranging from $50,000 to $1,000,000, normalization would rescale these values to fit between 0 and 1 which shrinks the magnitude of the values. The most common form of normalization is the min-max normalization.

This transformation is often suitable for algorithms that are sensitive to the magnitude(how big or small) of values and where the data does not necessarily follow a Gaussian distribution. Outliers will greatly affect the skewness of the data.

Intuitively normalization is supposed to perform better with these algorithms: K-Nearest Neighbor, K-Nearest Clustering, Tree-Based Ensemble algorithms like XGBoost and Neural Networks

x_normalized = x-x.min()/x.min()-x.max()

From the graph, it is evident nothing but only the scale of the data changes from 0–100 to 0–1.

Standardization

Like normalization, standardization also shrinks the scale, however, it shifts the data such that it has a mean of 0 and a standard deviation of 1. Standardization is also known as z-score normalization.

Standardization is more effective when the data follows a Gaussian distribution or when the algorithm you are using assumes a Gaussian distribution such as support vector machines, linear regression, and principal component analysis. By standardizing data, you’re not only centering it around zero but also scaling it by the standard deviation, which can make the data more “normal” in statistical terms.

Unlike normalization, standardization is less sensitive to outliers.

z = x-x.mean()/x.var()

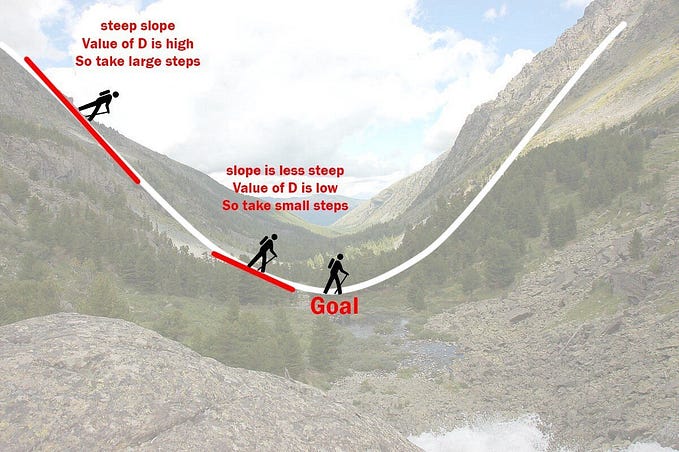

After standardization, we see from the graphs that only the scale shifts. Algorithms that rely on gradient descent (like linear regression, logistic regression, and principal component analysis(PCA)) converge faster because each feature contributes equally to the distance calculations, without any feature dominating because of its scale.

Note: Contrary to popular belief, standardization does not transform the data to a normal/gaussian distribution. To transform the shape of the data, log or square/cubic roots can be applied to the data.

Advantages of Feature Scaling

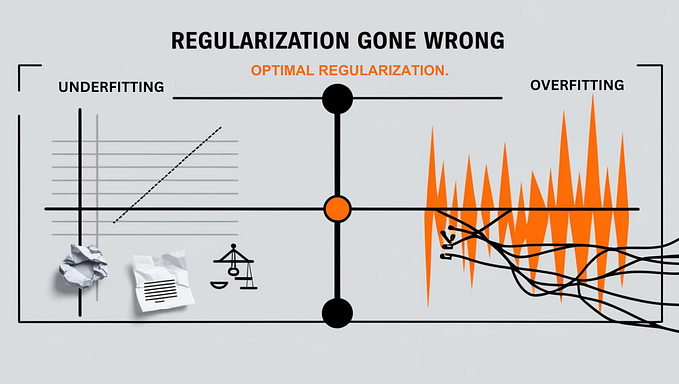

1. Speeds up Gradient Descent: Scaling helps gradient descent converge to the minimum more quickly by ensuring that all features contribute equally, avoiding skewed gradients due to features with larger scales.

2. Equal Importance to All Features: Ensures no single feature dominates in distance-based algorithms like SVM and KNN, allowing all features to have an equal impact on the model’s decisions.

3. Improves Algorithm Performance: Algorithms that assume data is normally distributed or that all features are on the same scale will perform better when these conditions are met, such as PCA.

Concretely, choosing between normalization and standardization depends on factors such as the nature of data and its distribution, the presence of outliers, the type of scale to use, etc. Most times it is better to test the two and choose the one that works better.